Professional Experience expand all

- 2024-present Meta AI, Research Scientist 2024-present

Making better recommendation systems.

- 2019-2024 Rice University, Ph.D. Researcher 2019-2024

Working on use of Machine Learning in Compilers (thesis). Developed several tools and techniques that deploy Reinforcement Learning, Large Language Models, and advanced search techniques in compilers. Additionally, working on development of HPCToolkit, large-scale profiling tool used widely accross US national labs. Implemented infrastructure for scalable GPU tracing, node-level metric tracing, performance counters, and various performance analysis.

- May 2023-Dec. 2023 Meta AI, Research Engineer Intern May 2023-Dec. 2023

Finetuning Llama2-based model on LLVM IR programs to solve phase-ordering problem. Developing Priority Sampling method that reaches the performance test data with 30 samples.

- May 2022-Dec. 2022 Meta AI, Research Engineer Intern May 2022-Dec. 2022

Developing deep reinforcement learning compiler for optimizing tensor operations.

- Jul. 2021-Oct. 2021 Berkeley Lab, Software Engineer Intern Jul. 2021-Oct. 2021

Profiling and analysis of power consumption on multi node GPU applications by using Nvidia NVML library.

- Aug. 2021 Argonne Lab, Argonne Training Program on Extreme-Scale Computing Aug. 2021

Hands-on tutorials on cutting-edge supercomputing ATPESC 2021.

- Jul. 2018-Oct. 2018 Rice University, Software Engineer Intern Jul. 2018-Oct. 2018

- Jul. 2017-Oct. 2017 Institute for High Performance Microelectronics, Hardware Engineer Intern Jul. 2017-Oct. 2017

Profiling and Analysis of FFT implementation on Xtensa Platform in C and theoretical analysis window functions.

- Jul. 2016-Oct. 2016 University of Novi Sad, Lab assistant Jul. 2016-Oct. 2016

Measuring and characterization of materials on micro-identer device

Education expand all

- 2024 PhD Computer Science, Rice University 2024

Thesis Optimizing Compiler Heuristics with Machine Learning Advisors John Mellor-Crummey, Aleksandar Zlateski and Chris Cummins Thesis focus on the use of Machine Learning in Compilers. First, we developed LoopTune, a reinforcement-learning-based framework for optimizing tensor computations, a core component of ML workloads. Second, we pioneered the use of Large Language Models (LLMs) in compiler optimization by predicting the sequence of LLVM optimization flags directly from LLVM-IR in text form. Third, Finally, we developed Unique Sampling, a simple deterministic sampling technique for LLM that produces unique samples ordered by the model’s confidence and outperforms the label’s performance with 30 samples. Additionally, developed infrastructure for scalable GPU profiling over many GPU nodes. Added support for measuring performance counters and node level metrics in HPCToolkit, as well as GPU-idleness analysis, which points to the cause of serialization in GPU code.

- 2018 MSc by Research, University of Novi Sad 2018

Grade 10/10 Thesis Finding Shortest Path in Dynamic Large-scale Graph, based on Lambda Architecture Advisors Vladimir Dimitrieski Developed the system for detecting the shortest path from multiple source in large-scale dynamic graph based on Lambda Architecture. Technologies used: Spark, HDFS, Kafka, Python Dash, Docker, Python

- 2018 Electronic Engineering and Computer Science, University of Novi Sad 2018

Grade 9.96/10 Thesis Hardware acceleration of chess engine Advisors Vuk Vrankovic FPGA implementation of chess board evaluation by following RTL methodology. Technologies used: C, SystemC, VHDL, SystemVerilog

Awards

- 2019 - 2020 Pollard Fellowship 2019 - 2020

- 2017 German government fellowship 2017

- 2017-2018 University of Novi Sad fellowship 2017-2018

- 2014 - 2019 Serbian government fellowship 2014 - 2019

Publications expand all

- 2024 Priority Sampling of Large Language Models for Compilers 2024

Authors Dejan Grubisic, Chris Cummins, Volker Seeker, Hugh Leather Publication Available on Arxiv We present Priority Sampling, a simple and deterministic sampling technique that produces unique samples ordered by the model’s confidence. Priority Sampling outperforms Nucleus Sampling for any number of samples, boosting the performance of the original model from 2.87% to 5% improvement over -Oz.

- 2023 Large Language Models for Compiler Optimization 2023

Authors Chris Cummins, Volker Seeker, Dejan Grubisic, Mostafa Elhoushi, Youwei Liang, Baptiste Roziere, Jonas Gehring, Fabian Gloeckle, Kim Hazelwood, Gabriel Synnaeve, Hugh Leather Publication Available on Arxiv We present a 7B-parameter LLaMa2-based model trained from scratch to optimize LLVM assembly for code size. Our approach achieves a 3.0% improvement in reducing instruction counts over the compiler with zero compilations, outperforming two state-of-the-art baselines that require thousands of compilations.

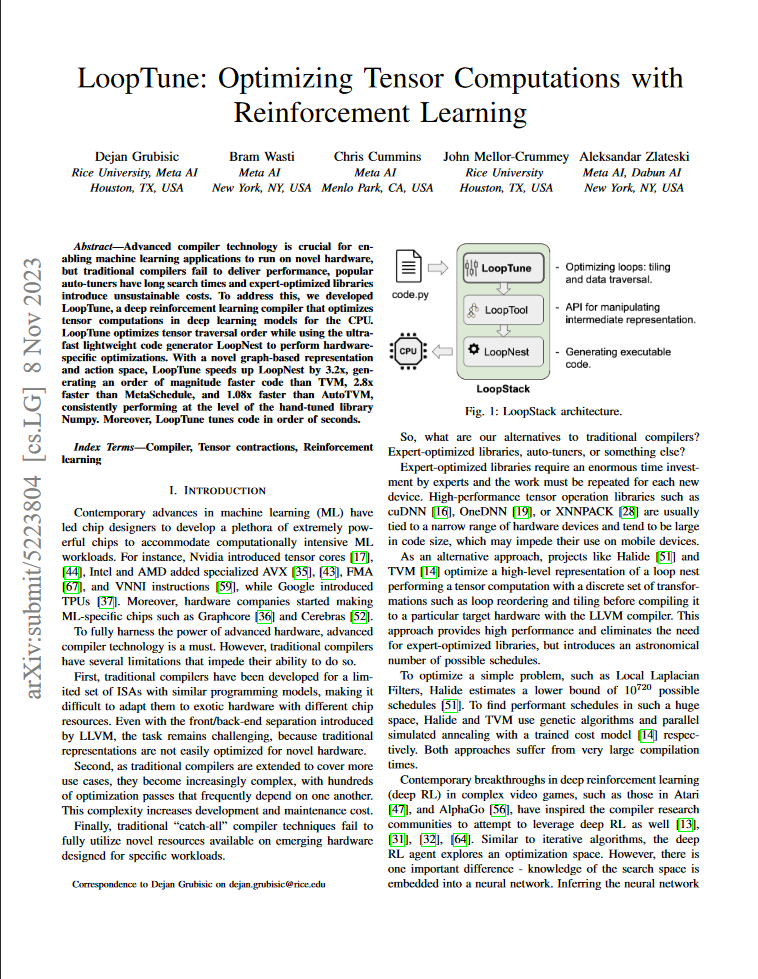

- 2023 LoopTune: Optimizing Tensor Programs with Reinforcement Learning 2023

Authors Dejan Grubisic, Bram Wasti, Chris Cummins, Aleksandar Zlateski Publication International Conference on Compiler Construction 24’ (Under submission) We present LoopTune, a deep reinforcement learning framework for optimizing tensor computations in deep learning models. LoopTune consistently exceeds the performance of traditional search-based algorithms, TVM, performing at the level of hand-tuned library Numpy.

- 2022 LoopStack: ML-friendly ML Compiler Stack 2022

Authors Bram Wasti, Dejan Grubisic, Benoit Steiner, Aleksandar Zlateski Publication Neural Information Processing Systems 22’ We present LoopStack, a domain-specific compiler stack for tensor operations. LoopStack is orders of magnitude faster than LLVM, while resulting in equal or improved run time performance, while defining predictable optimization space suitable for tuning with reinforcement learning.

- 2021 Measurement and Analysis of GPU-accelerated Applications with HpcToolkit 2021

Authors Keren Zhou, Laksono Adhianto, Jonathon Anderson, Aaron Cherian, Dejan Grubisic, Mark Krentel, Yumeng Liu, Xiaozhu Meng, John Mellor-Crummey Publication Parallel Computing Journal To address the challenge of performance analysis on the US DOE forthcoming exascale supercomputers, Rice University has been extending its HPCToolkit performance tools to support measurement and analysis of GPU-accelerated applications.

- 2021 Measurement and Analysis of GPU-Accelerated OpenCL Computations on Intel GPUs 2021

Authors Aaron Thomas Cherian, Keren Zhou, Dejan Grubisic, Xiaozhu Meng, John Mellor-Crummey Publication ProTools In this paper, we describe extensions to Rice University’s HPCToolkit performance tools that support measurement and analysis of Intel’s DPC++ programming model for GPU-accelerated systems atop an implementation of the industry-standard OpenCL framework for heterogeneous parallelism on Intel GPUs

- 2021 An Automated Tool for Analysis and Tuning of GPU-accelerated Code in HPC Applications 2021

Authors Keren Zhou, Xiaozhu Meng, Ryuichi Sai, Dejan Grubisic, John Mellor-Crummey Publication TPDS In this paper, we describe GPA, a performance advisor that suggests potential code optimizations at a hierarchy of levels, including individual lines, loops, and functions. To gather the fine-grained measurements needed to produce such insights, GPA uses instruction sampling and binary instrumentation to monitor execution of GPU code. sing GPA, we obtained speedups on a Volta V100 GPU ranging from 1.01x to 3.58x, with a geometric mean of 1.22x

- 2019 Sistem za pronalazenje najkrace putanje izmedju lokacija sa tezinama putanja promenjivim u realnom vremenu zasnovan na arhitekturi lambda 2019

Authors Dejan Grubisic Publication Zbornik radova Fakulteta tehničkih nauka u Novom Sadu Finding shortest path in dynamic multi source large-scale graph, based on Lambda architecture

Invited Talks expand all

- 2024 Optimizing Compiler Heuristics with Machine Learning, PhD Thesis Defense 2024

Duration 1 hour 13 minutes Demonstrating the use of machine learning in compilers, using reinforcement learning, large language models, and advanced techniques for sampling.

- 2024 Priority Sampling of Large Language Models for Compilers, EuroMLSys24 2024

Duration 13 minutes Presentation of Priority Sampling - a simple deterministic sampling technique for LLM that achieves suprisingly high performance.

- 2019 Finding Shortest Path in Dynamic Large-scale Graph, based on Lambda Architecture, Master's Thesis Defense 2019

Duration 45 minutes Implementing system for finding shortest path in dynamic graph suitable for big data.

- 2018 Hardware-acceleration-of-chess-engine, Bachelor's Thesis Defense 2018

Duration 45 minutes Implemenging FPGA component for accelerating evaluation of the board in chess engine.

- 2021 Monitoring and visualization of metric traces on GPU accelerated applications 2021

Duration 5 minutes Here we present the newly added features of monitoring power, temperature, and utilization on Nvidia GPUs in HPCToolkit.

Key Technical Skills

| C/C++ | |

| Python | |

| CudaC | |

| GNU / Linux | |

| Bash | |

| OpenMP/MPI | |

| VHDL | |

| Docker | |

| Java | |

| Spark | |

| Hadoop | |